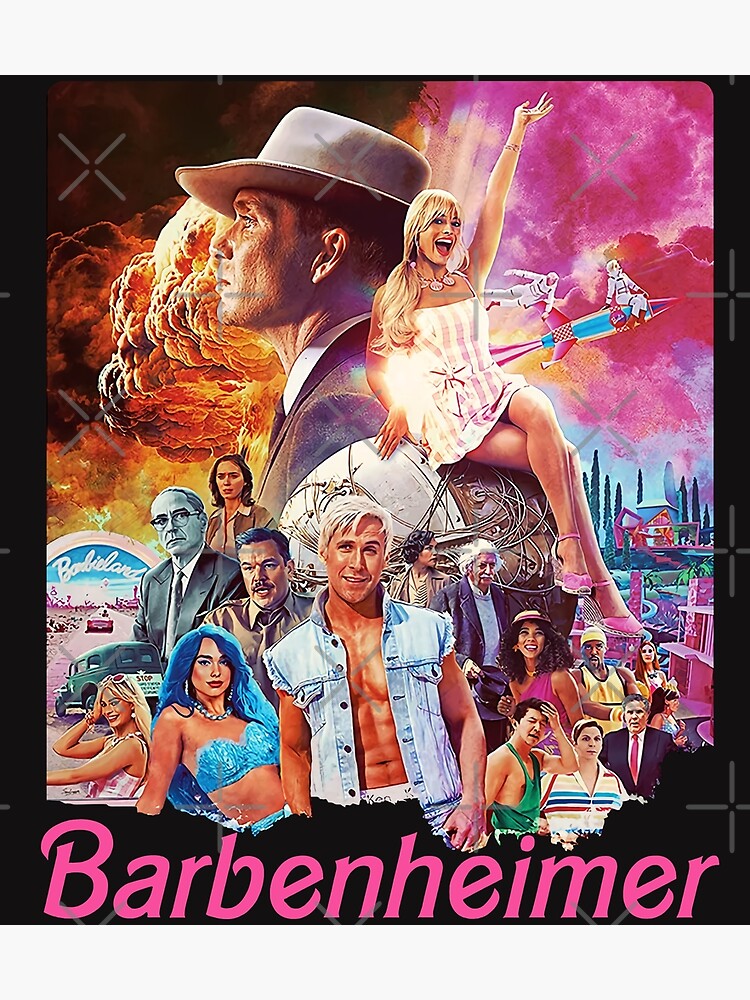

Finally! A really good year for movies. Box office was up and people showed they’d turn out for movies they really liked. Of course this is in large part because of two movies that, in lucky happenstance, premiered the same week. Apparently, this wasn’t the plan but the anticipation, critical approval, and popularity of “Barbie” and “Oppenheimer” resulted in the two films reinforcing each other. I know I wasn’t the only one who saw them back to back. And not only were they popular, they were GOOD.

The films that were not so good and not so popular were the big franchise movies, especially of the superhero variety. Good riddance to the Marvel Cinematic Universe. Don’t let the door hit you on the way out. And while I did enjoy the latest Mission Impossible movie, I’m not sure I will bother to see its sequel and find out how it ends.

So here’s hoping that Barbenheimer will convince Hollywood to take more chances on original stories, reward creativity and not take the audience for granted. With that as a preface, I saw 35 movies last year and here they are rated from best to worst.

1. Barbie

Yes, I think Barbie was a better movie than Oppenheimer, and not because I’m a sap for message movies. By reversing the gender power dynamic, it showed how clueless a privileged class can be (the women in Barbieland are no more sensitive to the feelings of the opposite sex than your standard Lax Bro). More important, “Barbie” teaches what it means to be human; Barbieland is a feminist Eden, but like Eve before her, Stereotypical Barbie wants knowledge, even if it means accepting pain, and ultimately death.

2. Oppenheimer

I was lucky enough to see this movie in an IMAX theater and it was a remarkable, enthralling experience. I saw it again online and it was almost as good (being able to watch a second time with subtitles was a big help). Christopher Nolan is one of the last Great Event filmmakers and he really delivered. It’s a sprawling story about the making of the atomic bomb and the subsequent implications for Robert Oppenheimer, who oversaw the bomb’s development. (By the way, for those of you who listen to podcasts, check out the episode of my American presidents podcast, “The Celluloid President,” in which we discuss “Oppenheimer.” You can access it here.)

3. Past Lives

What claim does someone you loved as a child, a teen, or a young adult have on you when you become a mature adult? That’s the question at the center of this very moving and thought-provoking movie about childhood sweethearts in Korea who separate and then reconnect 12 years later and 12 years after that. They still have a hold on each other even though their lives are completely different. Now what?

4. Living

Technically this lovely film is from 2022 but I couldn’t see it until it appeared on Prime in mid-2023. Set in a 1953 British Public Works office, this is NOTHING like the TV show “Parks and Rec.” Bill Nighy plays a formidable, stiff-upper-lip department chief who is as far removed from Ron Swanson as you can get. Almost everyone in the department is scared of him, and since he can’t really relate to his family, he has no emotional resources to fall back upon when he gets a fatal cancer diagnosis. Having rarely if ever experienced joy, he finds a way to leave a real legacy.

5. American Fiction

The funniest movie of the year. A Black author with literary aspirations is so put off by the public’s preference for racially stereotyped writers and the efforts of white would-be allies to absolve themselves of their guilty feelings that he pens an over-the-top fake memoir (“My Pafology”) that unexpectedly becomes a massive best-seller. He’s also got a messy personal life that requires some attention.

6. Anatomy of a Fall

Did she or didn’t she? A French movie about a successful novelist who is arrested and charged with murder when her husband is found dead from a fall from the third floor of their ski chalet. Their young son is a key witness who wants to save Mom from jail, but even he’s not sure if she’s innocent. Very absorbing courtroom drama and a fascinating look into the French justice system, which is very different from ours.

7. Air

It’s been quite a year for Matt Damon. He was the funniest character in “Oppenheimer,” and then he starred in “Air” as the real-life guy who saved Nike by signing Michael Jordan as the face of the company. This is a very enjoyable, supposedly true story, with Ben Affleck as Nike founder Phil Knight, and Viola Davis as MJ’s mother. In addition to providing insight into how the sports marketing business works (or used to work), it’s also a great vessel for early 80’s nostalgia.

8. The Holdovers

Speaking of nostalgia, the main kid in this movie seems to be the same age I was in the year during which this is set (early 1970’s). He’s attending a fancy prep school outside of Boston and is held over at school during Christmas break in the care of a cantankerous, unpopular teacher played by Paul Giamatti. They really bond during a trip to Boston, which looks very much like the grimy Boston I remember from those years. Terrifically written and acted.

9. Poor Things

Wow, this is a weird movie. It’s like someone merged The Bride of Frankenstein with Alice in Wonderland and sprinkled in some soft-core porn. Set in a fantasy Victorian world where it’s possible to combine and reanimate parts of dead bodies, a mad scientist plants a baby’s brain into the body of a recent suicide and we watch her consciousness rapidly progress from that of a toddler to that of a mature woman who has a very healthy appetite for sexual pleasure. This is wildly imaginative and an impressive intellectual exercise, but a little difficult to warm up to.

10. Killers of the Flower Moon

The scariest moment of the year was when I looked at my watch two-and-a-half hours into this movie and realized there was still another hour left. This Martin Scorsese epic is movie-making at its ponderous best, dealing with complex moral issues through beautiful cinematography and great writing. I’d have ranked it higher except that both Leonardo DiCaprio and Robert De Niro are way too old for their parts. This is especially true for Leo, whose character is supposedly about 25 years old.

11. May December

Julianne Moore portrays a woman who, having been sent to prison for statutory rape after having sex with a young teen her son’s age, marries the kid when she gets out of jail. Twenty years later, Natalie Portman is an actress who shows up to shadow Julianne Moore because she’s playing her in a movie. Not surprisingly, she finds a strange family dynamic where the young husband is emotionally stunted and their kids are very confused, but maybe not as confused as the actress, who tries to figure out what’s going on behind the surface and ends up exploiting everyone. There is a lot to think about here.

12. Maestro

This is a movie about the beginning and end (but not the middle) of Leonard Bernstein’s career as the most famous figure in American classical music, a genius who wants to do everything. He wants a glamorous wife and numerous male lovers on the side; he wants to write classical music and Broadway musicals; he wants to be on TV and also be taken seriously. Bradley Cooper does a tremendous job in embodying Bernstein’s many contradictions and probably should have been nominated for best director as well as best actor.

13. The Boys in the Boat

Practically a remake of Chariots of Fire, except that it’s about an American crew team in the 1936 Olympics instead of British runners during the 1924 games. There are no surprises but director George Clooney does such a good job depicting the desperation of these Depression-era athletes that it really works.

14. Golda

Helen Mirren is almost unrecognizable as Golda Meir, who was prime minister of Israel during the Yom Kippur War in 1973. It’s a fascinating look at how the Israeli government works in a crisis, but the movie does presume a high degree of knowledge about the war’s timeline and its major players.

15. No Hard Feelings

Jennifer Lawrence is hired by the parents of a shy 19-year-old to “bring him out of his shell” (if you know what I mean) before he goes to Princeton, and hijinks ensue. It’s very funny and very sweet if you can get over the ickiness of the parents paying a down-on-her-luck Long Island local to deflower their son.

16. Dumb Money

The protagonist of this movie is from my hometown of Brockton, Mass. (where “no one here can kick our ass”; that’s actually a football chant). Disappointingly, the movie was filmed in New Jersey and looks it. It’s based on a true story about the financial analyst, played by Paul Dano, who helped spur the GameStop stock mania of 2021 by touting it on Reddit. I remember it as a crazy story when it happened in real life because a whole lot of small day traders were able to bring a few short-sellers to their knees by buying an over-priced stock. Not mentioned in the movie, but after the protagonist made $20 million, he immediately moved out of Brockton!

17. Blackberry

Another true life business story, this time about the rise and fall of the Blackberry, which I still miss because my fat fingers make so many mistakes when I’m typing on an iPhone. This is one of those classic stories about a nerds/business shark marriage that works for a while and then goes astray because of hubris and incompatibility issues (and of course a superior competing product from Apple).

18. Mission Impossible: Dead Reckoning, Part 1

Very good action movie that, like “Top Gun 2” last year, was supposed to bring people back to the movie theater. Alas, Barbenheimer did that instead. I enjoyed it but as is often the case in movies that depend on thrilling chases to keep the audience’s attention, right now I can’t remember what the whole thing was about.

19. The Book of Clarence

This movie is as offbeat as they come and I don’t know who it is aimed at. It’s a little bit funny, but not funny enough to be a comedy like “The Life of Brian,” which it most closely resembles. It’s pretty religious but not enough to be taken seriously like “The Chosen,” which it resembles a little bit. The gist of the plot is that Clarence, the brother of the Apostle Thomas, is the bad seed of the family. As bad seeds do, he decides to pose as the Messiah for the perks of being the Christ, but after getting crucified himself realizes that Jesus is the real deal. There’s also a racial angle since all the Jews are played by Black actors and all the Romans are white.

20. Guardians of The Galaxy

Guardians of the Galaxy has been the only superhero movie franchise I have been able to stomach for the past ten years, and the saga ends with a satisfying denouement. Having said that, I think one, possibly two, episodes in this franchise would have been enough.

21. You Hurt My Feelings

This has to be one of the lowest-stakes movies ever. The conflict is right there in the title. A Manhattan husband doesn’t like his wife’s new novel but tells her he does, because as every husband knows, the wrong answer to the question “Do you like my novel?” can be ten times more devastating than “Do you like my dress?” But her feelings are hurt when she eavesdrops on him and finds out the truth. That’s the proximate justification for the movie, but the film is REALLY about the disgruntlement of middle-class New Yorkers who just aren’t very good at their jobs.

22. The Lost King

To like this movie it helps a lot if you care whether Richard III really killed his nephews in the Tower in 1484. This is the mostly true story about how Philippa Langley, a depressed Englishwoman who really believes in Richard’s innocence (which I do NOT), was able to find his body buried under a parking lot in the town of Leicester.

23. American Symphony

The year 2021 seemed to be a very good one for Jon Batiste, perhaps best known as Stephen Colbert’s bandleader. He won all kinds of awards, including an Oscar and a couple of Grammys, and had a hit record. Regrettably, his wife, the NYT columnist Suleika Jaouad, learned that her leukemia had returned and she needed bone marrow treatment. Also, Batiste was trying to write a major classical piece called American Symphony. This documentary film captures all of this in intimate detail and you can only marvel at the highs and lows that people can experience simultaneously.

24. Elemental

Can fire and water coexist? Can they form a romantic couple? Pixar wants to know. The ancient elements of earth, wind, fire and water (and no, this is not based on the 1960s rock band) are cartoon ethnicities trying to live in harmony in this very imaginative movie. The movie is not shy about addressing issues like racial assimilation, the immigrant experience, etc., but still manages to be fun.

25. Indiana Jones and the Dial of Destiny

Was anyone asking for a fifth Indiana Jones movie? Not me. Harrison Ford looks pretty good for a guy his age. The movie begins in 1969 Greenwich Village, where Indy is being chased by Nazis (again!!!) who are trying to go back in time and change the end of World War II. There’s one excellent chase scene on a horse in the subway, but Indy is so crotchety now that the movie lacks its original zest.

26. Taylor Swift Eras Tour

I thought I’d check out what the Taylor Swift thing is all about and I mostly get it now that I’ve seen this concert film. I will say this: the single most thrilling moment of the year, cinematically, is when she dives into a previously unidentified hole in the stage. Whoa! As in many concert movies, your enjoyment depends to a large extent on how well you know the music. So I enjoyed it when I recognized the songs and was a bit bored when I didn’t. (Very impressive costume changes, though.)

27. Champions

Woody Harrelson is a talented but hotheaded basketball coach who is sentenced to community service coaching a basketball team of people with learning disabilities. This movie is very sweet but you can predict what’s going to happen. It is possibly listed lower than it should be, but the problem is that when I saw the title on my list of movies seen during the year I had to Google it to refresh my memory.

28. Good Grief

Dan Levy from “Schitt’s Creek” wrote, stars in and directed this story about an American in London who seems to have it all until his husband is killed in a car crash at Christmas. A year of unassuaged grieving occurs and things aren’t improved when he learns that the husband had a secret apartment in Paris where he entertained his secret boyfriend. Everyone in the movie has a lot of unresolved issues, which are eventually resolved through a series of confrontations and hard discussions.

29. Theater Camp

This is an arch, semi-satirical look at the self-important counselors at a theater camp who seem to think they are Broadway producers. It’s charming and funny for a while and then you wish everyone would just get a life.

30. You Are So Not Coming to My Bat Mitzvah

Adam Sandler has a producing deal with Netflix for which he knocks off one-to-two movies a year. This Bat Mitzvah movie is a family affair, though. Not only is he in it but his wife and daughters are too. The story revolves around the tween angst of wealthy, Jewish California girls who believe that their Bat Mitzvahs will be the crowning achievement of their lives — that and kissing a boy for the first time. The movie is a little bit funny, a little interesting but I am definitely not the target audience.

31. Are You There God? It’s Me, Margaret

I am not the target audience for this movie either. It’s a dramatization of Judy Blume’s best-known novel, which takes a hard look at how difficult the tween years can be. I was surprised to see how significantly religion figures into the equation even though God is mentioned right there in the title. I respect the effort, and empathized with poor overly hormoned Margaret, but the movie gives off too much of a made-for-TV vibe for me to care a lot.

32. 80 For Brady

Now this is a movie that was targeted for an audience of one: me. Four senior ladies in Boston find meaning in worshipping Tom Brady, travel to the Super Bowl and watch the greatest comeback in football history (the 34-28 win over the Atlanta Falcons in SB LI). But if you’ve ever watched “Grace and Frankie,” you know the kind of antics that Jane Fonda and Lily Tomlin will pull (i.e. far-fetched). These antics are fine but not really my thing.

33. Eric Clapton: Across 24 Nights

In 1991 and 1992, Eric Clapton played 24 concerts at the Albert Hall and this documentary is a stitched-together presentation of the highlights, with some of his greatest hits and some lesser-known songs. With no narration or subtitles explaining who he’s playing with, this movie is really for super-fans (see Taylor Swift, above). Still, experiencing “Layla” played on the big screen is worth the price of admission.

34. Murder Mystery 2

Another Adam Sandler production for Netflix. I like him, I certainly like Jennifer Aniston, and I like the European backdrops, but this is the kind of thing you only stream when there are four people in the room who can’t agree on anything else to watch.

35. Beautiful Disaster

This is the only legitimately bad movie on the list, but that’s OK because we watched it in advance of attending a live podcast called How Did This Get Made?, where they dissect really sub-par movies to hilarious effect. I’d summarize the plot for you but it’s just too ridiculous.